What is Governance for AI and AI Agents?

Recently, “AI Governance” has become a buzzword, and for good reason: today’s enterprises need governance in order to safely deploy AI. However, there is still confusion about what governance for AI and AI agents actually is. Today, we want to dissect what governance means in this context and explain why it’s a particularly difficult challenge.

What is AI Governance?

AI Governance is the set of policies, processes, and controls that ensure artificial intelligence systems (including models, applications, and agents) are developed, deployed, and managed safely and compliantly. The goal of AI Governance is to scale AI without creating security holes, violating compliance standards, or endangering the company’s reputation.

However, that’s just theory. In practice, AI Governance is about tackling some specific sub-problems. These sub-problems primarily emerged with the explosion of AI agents. Notably, AI Governance is new. Existing governance frameworks (e.g. SOC 2) cover it only tangentially, mainly where AI interacts with data.

The Sudden Popularity of AI Agents (and their risks)

Today, agents have won the hearts and minds of developers and users. They’re autonomous, serving as a natural extension of AI. Just like AI models, they’re highly configurable. However, they also present a nightmare for security and risk teams.

Why? Agents have two independent categories of risk, each with its own associated consequences.

- Data Risk. AI agents could display sensitive information to an employee who shouldn’t have permission to see that data. This is particularly a problem for companies with data custody compliance requirements, where violations might carry fines or endanger customer contracts.

- Mutation Risk. AI agents often have write access to systems. An AI agent might make a stateful update in an external system incorrectly or inappropriately (e.g. send an email or an unauthorized Slack message, delete a ticket, or make a payment).

To address these, companies need a governance framework that an agent ecosystem can strictly abide by when provisioning access.

Vendors do not bear the risk. Enterprises do.

It’s important to note that the responsibility for managing these risks falls onto the customer, not the vendor. Vendors are rarely willing to accept responsibility for errors in their applications (or now, agents). After all, agents can behave erratically since AI is non-deterministic, and varying prompts can result in radically different behavior. Accordingly, it is up to enterprises to determine, for any agent, how to protect themselves from the risks.

For example, there are many vendors out there that promise agents that can send emails or create Jira tickets, but naturally none will cover your legal fees if their agent accidentally publishes sensitive information to a public Jira board or sends an email to an external party with your customer’s PII.

Instead, enterprises must adopt tooling to protect themselves from the downside risk of agents, especially regulated enterprises that are subject to significant consequences if data is leaked.

The Three Tenets of Governance

This brings us back to governance. Enterprises need to protect themselves from agent mistakes; the remaining question is how. There are three primary tenets:

- Access. Agents need to be designated access that doesn’t sidestep constraints that we’ve otherwise placed on humans, other servers, or devices. Generally speaking, an agent should always have an owner and inherit the same restrictions as that owner (alongside potentially more). This follows the principle of least privilege, where only the necessary permissions are provisioned for the agent.

- Auditing. Agent actions need to be monitored so that any breach or mistake can be traced and recreated by developers. With humans, you could simply message the company Slack and ask, “Who deleted this table?” With agents, there is no reliable way to query history unless it’s deterministically monitored.

- Human-in-the-Loop. For highly sensitive actions, a human should manually grant an agent access after being presented with a clear summary of the action that’s being taken. This minimizes the odds of a destructive action (e.g. a full database drop).

Two of these tenets are simple: access and auditing. Access is a security field that long predates AI agents; agents are simply subject to the same RBAC, ABAC, ReBAC, or whatever access system an enterprise has opted for. Auditing, meanwhile, is as simple as collecting information on every single thing that an agent does. It, too, has its roots in earlier systems, like network observability.
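To make the auditing tenet concrete, here is a minimal sketch of an append-only audit log. Everything in it (the `AuditEvent` fields, the `AuditLog` class, the action names) is a hypothetical illustration rather than any particular product's API, and a real deployment would write to durable, tamper-resistant storage instead of memory.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass(frozen=True)
class AuditEvent:
    agent_id: str   # hypothetical identifier for the agent
    owner_id: str   # the human owner the agent acts on behalf of
    action: str     # e.g. "db.drop_table" (illustrative name)
    target: str     # the resource the action touched
    allowed: bool   # whether the access check passed
    timestamp: float = field(default_factory=time.time)


class AuditLog:
    """Append-only, in-memory log used only for illustration."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        # Every agent action is recorded, whether it was allowed or denied.
        self._events.append(event)

    def who_did(self, action: str, target: str) -> List[AuditEvent]:
        # The agent equivalent of asking "Who deleted this table?"
        return [e for e in self._events
                if e.action == action and e.target == target]

    def export(self) -> str:
        # One JSON object per line, suitable for shipping to a log pipeline.
        return "\n".join(json.dumps(asdict(e), sort_keys=True)
                         for e in self._events)
```

Because each event carries both the agent and its owner, a query like `log.who_did("db.drop_table", "orders")` returns the responsible agent along with the human behind it.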

However, human-in-the-loop is more complex. Humans shouldn't have to approve everything, since that would defeat the purpose of automation. At the same time, humans must approve certain high risk actions. That requires its own framework for determining what does and doesn't need a human-in-the-loop.

When to Use Human-in-the-Loop?

The first thing to realize is that not all actions carry the same level of risk. Some are harmless, some can introduce operational friction if done incorrectly, and others can create real financial, legal, or compliance exposure.

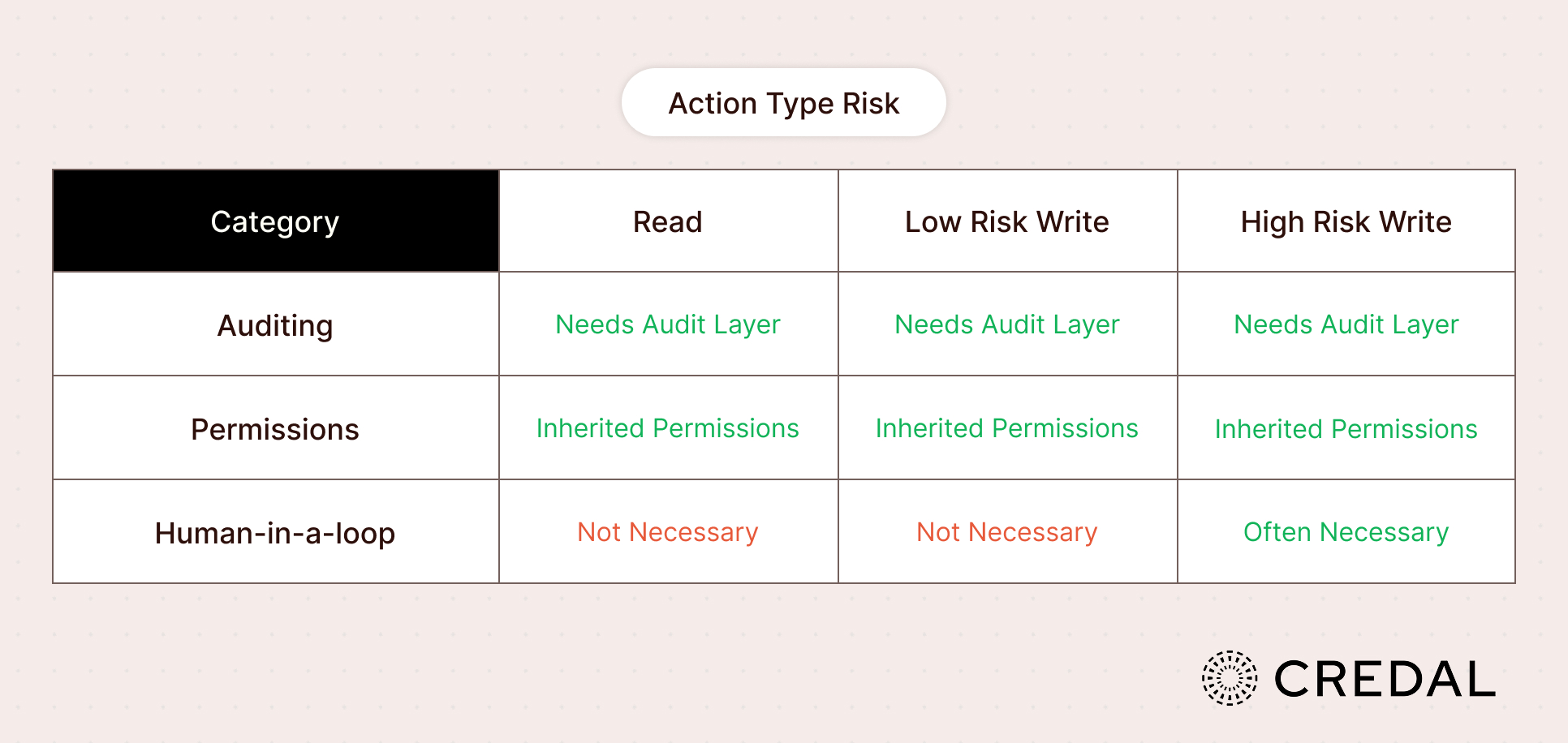

There are three categories of actions:

- Read-only (Lowest Risk)

- Low risk write

- High risk write

Let’s discuss how we should treat these categories distinctly.

Read Only Actions

For read only actions, the responsibility should flow to the human owner. Through a governance framework, the owner should designate access to the agent, never being able to designate broader access than they possess themselves.
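As a rough sketch of that delegation rule, the check below refuses any grant that exceeds what the owner holds. The `Owner` type, the `delegate` function, and the permission strings are all assumptions made for illustration; in practice the owner's permissions would come from whatever RBAC, ABAC, or ReBAC system the enterprise already runs.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Owner:
    name: str
    permissions: FrozenSet[str]  # e.g. {"crm:read", "wiki:read"} (illustrative)


def delegate(owner: Owner, requested: FrozenSet[str]) -> FrozenSet[str]:
    """Return the agent's grant, rejecting anything the owner does not hold."""
    excess = requested - owner.permissions
    if excess:
        # The owner can never designate broader access than they possess.
        raise PermissionError(
            f"{owner.name} cannot delegate: {sorted(excess)}"
        )
    # Least privilege: the agent gets only what was requested, not
    # everything the owner has.
    return requested
```

Here an owner holding `crm:read` and `wiki:read` can hand an agent `crm:read`, but a request that also includes `payroll:read` fails outright rather than being silently trimmed.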

Low Risk Write Actions

Low risk write actions should typically be allowed to proceed without human approval. As long as permissions and auditing are correctly configured, requiring humans to approve every action would be more of a hindrance than a help.

High Risk Write Actions

For high risk write actions, however, enterprises should consider requiring an explicit approval.

Determining Low Risk versus High Risk

Notably, it is the responsibility of the enterprise to draw the line between what constitutes a low risk versus high risk write action (e.g. it may be low risk to update a Salesforce record, but high risk to send payments). In the high risk case, accountability for the action will fall to the human approving. In the low risk case, accountability falls to the agent builder.
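One way to picture this is as an enterprise-owned policy table that maps each action to a risk tier, which in turn decides whether approval is needed and who is accountable. The sketch below is only an assumption about how such a table could look: the action names, the `POLICY` mapping, and the choice to treat unlisted actions as high risk are ours, not a prescribed standard.

```python
from enum import Enum
from typing import Dict, Optional


class Risk(Enum):
    READ_ONLY = "read_only"
    LOW_RISK_WRITE = "low_risk_write"
    HIGH_RISK_WRITE = "high_risk_write"


# Hypothetical policy; each enterprise draws these lines for itself.
POLICY: Dict[str, Risk] = {
    "salesforce.read_record": Risk.READ_ONLY,
    "salesforce.update_record": Risk.LOW_RISK_WRITE,
    "payments.send": Risk.HIGH_RISK_WRITE,
}


def classify(action: str) -> Risk:
    # Assumption of this sketch: unlisted actions get the strictest tier.
    return POLICY.get(action, Risk.HIGH_RISK_WRITE)


def requires_approval(action: str) -> bool:
    return classify(action) is Risk.HIGH_RISK_WRITE


def accountable_party(action: str, owner: str, builder: str,
                      approver: Optional[str] = None) -> str:
    """Map an action to whoever is accountable under the tiers above."""
    risk = classify(action)
    if risk is Risk.READ_ONLY:
        return owner      # responsibility flows to the human owner
    if risk is Risk.LOW_RISK_WRITE:
        return builder    # accountability falls to the agent builder
    if approver is None:
        raise PermissionError(f"{action} requires human approval")
    return approver       # accountability falls to the approving human
```

With this policy, `requires_approval("salesforce.update_record")` is false while `requires_approval("payments.send")` is true, and a high risk action with no approver is blocked rather than executed.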

In larger or more regulated enterprises, it becomes increasingly necessary to centralize action governance, since these enterprises tend to codify their practices. This includes establishing definitions of high and low risk actions. After all, the enterprise needs to be able to defend its choices to a regulator when disclosing its agentic systems.

Establishing these categories is about giving enterprises a defensible, repeatable framework for governing agent actions. By drawing clear lines between read-only, low risk, and high risk writes, organizations can match the level of oversight to the level of risk, preserve user experience where possible, and step in with human judgment where it’s essential.

What is Credal?

Credal is an AI governance and orchestration platform that provides out-of-the-box managed agents with built-in auditing, human-in-the-loop, and permissions inheritance. Credal is the governance system and environment within which agents operate. However, products like Credal do not determine what is low risk versus high risk, and by extension, what actions need human-in-the-loop workflows. Rather, that responsibility falls on the organization.

If you are interested in learning more about Credal, sign up for a demo today.