What is Multi-Agent Orchestration?

Recently, we discussed how AI orchestration is “the process of automating and coordinating different AI models, agents, and systems to create complex, efficient, and scalable workflows and applications”. In particular, we explained how that involves seven tenets: (i) integrations, (ii) permissions, (iii) agency, (iv) memory, (v) human-in-the-loop, (vi) observability, and (vii) scalability.

Today, we want to zoom in on that final tenet, in particular exploring how multi-agent orchestration works.

What is a multi-agent system?

As the name implies, multi-agent systems are systems where AI agents work together towards a goal. It’s a relatively new frontier of AI computing—colloquially, most “agentic workflows” refer to limited single-agent, siloed processes.

Why are single AI agents not enough?

Agents are autonomous software entities that can perceive their environment, make decisions, and take actions to achieve specific goals. However, there is a limit to this approach. Agents are able to make decisions based on context, and at a massive enterprise, it’s easy for an agent to get lost in an overwhelming amount of context.

To visualize this, imagine if a single employee had to know everything about the company’s internal policies, data infrastructure, Salesforce records, engineering issue tracking, product data, and every single file in the Google Drive. They would not be able to function without feeling overwhelmed with information. AI agents are the same; they require context to perform, but with too much context, they’ll quickly conflate things.

Why multi-agent?

By having AI agents specialize in specific tasks, they can master their work with high accuracy. For instance, a single agent might perform well if its sole job is to send emails. To be clear, sending emails involves multiple sources of context: the agent needs to know the company’s communication guidelines, the company’s product information for messaging, the company’s contacts for dispatching emails, and the company’s policies on sending too many emails at once. However, by focusing on just email, a single agent could be the effective mailman of the company.

Having specialized agents doesn’t limit what can be done with AI; in fact, it expands it. For instance, imagine a recruiting agent whose job is to screen resumes and schedule follow-up interviews. That recruiting agent is focused on different contexts: the company’s hiring philosophy, the company’s job openings, and the hiring platform’s candidates. However, a recruiting agent might need to send an email to schedule a follow-up. To do so, instead of learning all the redundant information of the email agent, it’ll interface with the email agent. And just like that, we have an effective multi-agent workflow!

In a nutshell, multi-agent involves: (i) specialization over monoliths, avoiding single “do-everything” agents that drown in context, and (ii) cross-agent communication to get work done.
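To make the two ideas concrete, here is a minimal sketch in Python. It is only an illustration: the class names, the `min_years` screening rule, and the contact data are all hypothetical, and real agents would sit on top of an LLM rather than hard-coded logic. The point is the shape: the recruiting agent never learns email addresses; it asks the email agent.

```python
from dataclasses import dataclass, field


@dataclass
class EmailAgent:
    """Owns email-specific context: here, just a contact book and a sent log."""
    contacts: dict[str, str]
    sent: list[tuple[str, str]] = field(default_factory=list)

    def send(self, name: str, body: str) -> str:
        address = self.contacts[name]  # only the email agent knows addresses
        self.sent.append((address, body))
        return address


@dataclass
class RecruitingAgent:
    """Owns recruiting context and delegates all emailing to the email agent."""
    email_agent: EmailAgent
    min_years: int = 3  # hypothetical screening rule

    def screen(self, name: str, years_experience: int) -> bool:
        if years_experience < self.min_years:
            return False
        # Cross-agent communication: hand the outreach to the specialist.
        self.email_agent.send(name, "We'd like to schedule a follow-up interview.")
        return True


email = EmailAgent(contacts={"Ada": "ada@example.com"})
recruiter = RecruitingAgent(email_agent=email)
invited = recruiter.screen("Ada", years_experience=5)
```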

What are some examples of multi-agent workflows?

To visualize the impact of multi-agent workflows, let’s walk through a few examples. For the sake of readability, we’ll name each of the agents and mark them in italics.

Recruiting Workflow

Imagine a recruiting workflow to evaluate candidates and do the first round of screening.

For this workflow, an Applications Evaluator Agent interfaces with an Email Outreach Agent and a Scheduler Agent. The goal is for each candidate application to be evaluated and for promising candidates to be contacted to book an interview. With multiple agents, the evaluator can focus on evaluations while the email and scheduler agents ensure the company’s voice, letterhead, and calendars are used.

Customer Support Triage Workflow

Imagine a customer support workflow that triages issues so that humans can better manage their time.

This workflow involves an Intent Classifier Agent that directs issues to a Knowledge Base Retrieval Agent. Additionally, the Intent Classifier Agent delegates tasks to a Remediation Agent, which queries a Policy Guard Agent that’s the expert on the business’s policies.

The system operates on a publish/subscribe model for ticket updates and includes approval requirements for any destructive remediation actions, ensuring proper oversight of customer issue resolution.
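As a rough sketch of that pattern, the Python below wires a tiny in-process publish/subscribe bus to a remediation handler that refuses destructive actions unless an approver is attached. Everything here is an assumption for illustration: the topic name, the action names, and the `approved_by` field are hypothetical, and a production system would use a real message broker.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class TicketBus:
    """Tiny in-process publish/subscribe bus keyed by topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


DESTRUCTIVE_ACTIONS = {"refund", "delete_account"}  # hypothetical action names
executed: list[str] = []
pending_approval: list[str] = []


def remediation_agent(event: dict) -> None:
    """Run safe actions immediately; park destructive ones until approved."""
    action = event["action"]
    if action in DESTRUCTIVE_ACTIONS and not event.get("approved_by"):
        pending_approval.append(action)
        return
    executed.append(action)


bus = TicketBus()
bus.subscribe("ticket.updated", remediation_agent)
bus.publish("ticket.updated", {"action": "send_faq_link"})
bus.publish("ticket.updated", {"action": "refund"})
bus.publish("ticket.updated", {"action": "refund", "approved_by": "support-lead"})
```

After these three events, the unapproved refund is waiting for a human, while the FAQ link and the approved refund have run.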

FinOps / Revenue Operations Workflow

Imagine a revenue system that looks out for anomalies and notifies the right people.

A Report Compiler Agent works bidirectionally with an Anomaly Detector Agent and an Account Owner Notifier to maintain financial operations. This system implements rate-limited outreach to prevent overwhelming account owners and maintains detailed audit logs of all financial monitoring activities, creating a balanced approach to financial operations management.
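Rate-limited outreach with an audit trail could look something like the sketch below. The class, its parameters, and the owner identifiers are hypothetical; the clock is passed in explicitly (`now`) so the behavior is easy to reason about. Note that suppressed notifications are still written to the audit log.

```python
from dataclasses import dataclass, field


@dataclass
class RateLimitedNotifier:
    """Allows at most `max_per_window` notifications per owner per time window."""
    max_per_window: int
    window_seconds: float
    _sent_at: dict[str, list[float]] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def notify(self, owner: str, message: str, now: float) -> bool:
        # Keep only the timestamps that still fall inside the window.
        recent = [t for t in self._sent_at.get(owner, []) if now - t < self.window_seconds]
        allowed = len(recent) < self.max_per_window
        if allowed:
            recent.append(now)
        self._sent_at[owner] = recent
        # Every attempt is audited, whether or not it was actually sent.
        self.audit_log.append({"owner": owner, "message": message, "at": now, "sent": allowed})
        return allowed


notifier = RateLimitedNotifier(max_per_window=2, window_seconds=3600)
results = [notifier.notify("owner-1", f"anomaly {i}", now=float(i)) for i in range(3)]
later = notifier.notify("owner-1", "anomaly 3", now=4000.0)
```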

So what’s the problem? Multiple agents dramatically increase the attack surface.

Earlier, we mentioned the seven tenets of AI orchestration. Some of these (in particular, permissions, memory, and observability) become considerably more difficult when we have multiple agents.

Permissions and Memory

Permissions and Memory go hand-in-hand in the context of AI agents. In particular, they are interconnected from the standpoint of security.

A tenet of safe AI agents is for AI agents to inherit permissions of their dispatchers. If a user can see X, so can an agent. If a user cannot see Y, then neither can the agent, or else the agent might leak that information to the user!
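In code, the idea is simply that the agent’s view of the data is filtered through its dispatcher’s permissions. The sketch below is an assumption-laden illustration, with hypothetical `User`, `Document`, and `MirroredAgent` types rather than any particular platform’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    readable: frozenset[str]  # IDs of documents this user may read


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str


class MirroredAgent:
    """An agent that can only read what its dispatching user can read."""

    def __init__(self, dispatcher: User, corpus: list[Document]) -> None:
        self._dispatcher = dispatcher
        self._corpus = corpus

    def visible_documents(self) -> list[Document]:
        return [d for d in self._corpus if d.doc_id in self._dispatcher.readable]


corpus = [Document("X", "public roadmap"), Document("Y", "salary bands")]
alice = User("alice", frozenset({"X"}))
agent = MirroredAgent(alice, corpus)
visible_ids = [d.doc_id for d in agent.visible_documents()]
```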

This is made possible by permissions mirroring, but that becomes significantly more difficult for multi-agent orchestration. For example:

- Agent A was invoked by User A. Agent B was invoked by User B. User A and User B have different sets of permissions; if Agent A leaks information to Agent B, then User B will gain information they shouldn’t have!

- Additionally, the permissions granted to Agent A and Agent B need to be communicated between them so that a leak can be anticipated and prevented.

- Finally, even if Agent A is prompted only with context that Agent B is permitted to see, Agent A might still dig into previous context from its memory that Agent B is not permitted to see.

The solution? An AI orchestration framework must ensure that Agent A and Agent B don’t “gossip” information. Agent A and Agent B should only share information that they both have access to. That also means not tapping any previous memory that might leak context.
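The “mutual access” rule boils down to a set intersection. Here is a minimal sketch, assuming each agent’s permissions can be represented as a set of readable keys (the function name and data are hypothetical):

```python
def shareable(items: dict[str, str], a_can_read: set[str], b_can_read: set[str]) -> dict[str, str]:
    """Return only the items that both sides are allowed to read."""
    mutual = a_can_read & b_can_read
    return {key: value for key, value in items.items() if key in mutual}


agent_a_context = {"roadmap": "Q3 launch", "salaries": "band 4", "pricing": "tier list"}
safe_to_send = shareable(
    agent_a_context,
    a_can_read={"roadmap", "salaries", "pricing"},
    b_can_read={"roadmap", "pricing", "tickets"},
)
```

Here, `salaries` is dropped because only Agent A’s dispatcher can read it.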

A framework to achieve this involves four specific rules:

- Memory partitions must be used: Memory should be tagged per‑agent, per‑team, and shared/global—with policy‑gated access.

- Purpose‑bound queries: Agents should fetch only what their current task allows.

- Redaction/transform hooks: Data should be sanitized before sharing.

- Provenance tags: Every memory item should carry a source, owner, and ACL.
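The four rules above can be sketched together in a few lines of Python. This is an illustrative assumption, not a reference implementation: the `MemoryItem` fields, the `purpose` label, and the email-masking regex are hypothetical stand-ins for whatever policy engine and redaction logic a real platform would use.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryItem:
    text: str
    source: str          # provenance: where the item came from
    owner: str           # provenance: who owns it
    acl: frozenset[str]  # principals allowed to read it
    partition: str       # "agent", "team", or "global"
    purpose: str         # task category the item may be used for


EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Redaction hook: mask email addresses before text leaves the store."""
    return EMAIL_PATTERN.sub("[redacted-email]", text)


def fetch(store: list[MemoryItem], principal: str, purpose: str) -> list[str]:
    """Purpose-bound, policy-gated read: ACL and purpose must both match."""
    return [
        redact(item.text)
        for item in store
        if principal in item.acl and item.purpose == purpose
    ]


store = [
    MemoryItem("Reach Ada at ada@example.com", "crm", "sales-team",
               frozenset({"email-agent"}), "team", "outreach"),
    MemoryItem("Q3 salary bands", "hr-drive", "hr-team",
               frozenset({"hr-agent"}), "team", "compensation"),
]
outreach_memory = fetch(store, principal="email-agent", purpose="outreach")
blocked = fetch(store, principal="email-agent", purpose="compensation")
```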

Another strategy is to leverage a human in the loop, where an agent needs to get permission from a human before it accesses another agent it hasn’t worked with before. However, that’s an additional safety measure; it does not replace any of the rules above.

You might be wondering if this constraint hurts the efficacy of AI agents. In short, it does. However, it is up to the organization to use an AI orchestration solution to craft permissions mirroring in a way that’ll maximize context and collaboration without triggering a leak. It’s a non-negotiable constraint, especially with compliance standards like GDPR, PCI DSS v4, and CCPA imposing penalties if data custody requirements are not followed.

Observability

Observability for single agents is comparatively simple: an agent’s reads and writes just need to be tracked. However, with multiple agents, observability needs to monitor and visualize paths between agents.

This is akin to how we trace requests in conventional systems. Modern distributed tracing follows each request, along with the subsequent requests it triggers, as it percolates through a system. The same is needed for AI agents, where we need to track who called whom, with what context, and what tools or actions were invoked.

To be effective, these paths need to include trace IDs, spans per agent/action, decision logs (including permission checks), data access logs, and cost/latency metrics.
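A minimal sketch of such a trace might look like the following. The `Span` and `Tracer` types and their field names are hypothetical and only loosely modeled on conventional distributed tracing; a real deployment would more likely use an established tracing library.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    agent: str
    action: str
    permission_checked: bool
    latency_ms: float
    cost_usd: float


@dataclass
class Tracer:
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list[Span] = field(default_factory=list)

    def record(self, agent: str, action: str, parent: Optional[Span] = None, *,
               permission_checked: bool = True, latency_ms: float = 0.0,
               cost_usd: float = 0.0) -> Span:
        span = Span(self.trace_id, uuid.uuid4().hex,
                    parent.span_id if parent else None,
                    agent, action, permission_checked, latency_ms, cost_usd)
        self.spans.append(span)
        return span

    def path(self, span: Span) -> list[str]:
        """Walk parent links to reconstruct who called whom."""
        by_id = {s.span_id: s for s in self.spans}
        chain: list[str] = []
        current: Optional[Span] = span
        while current is not None:
            chain.append(current.agent)
            current = by_id.get(current.parent_id) if current.parent_id else None
        return list(reversed(chain))


tracer = Tracer()
root = tracer.record("intent-classifier", "classify")
child = tracer.record("remediation", "propose_fix", parent=root, latency_ms=120.0, cost_usd=0.002)
leaf = tracer.record("policy-guard", "check_policy", parent=child)
call_path = tracer.path(leaf)
```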

Observability data should be easily explored through two views: (i) one oriented on traces to debug individual sessions and (ii) another that provides topological analysis of who collaborates with whom, what hot paths are common, and identification of bottlenecks.
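The second, topological view is essentially an aggregation over recorded calls. As a rough sketch, assuming each call is logged as a hypothetical `(caller, callee, latency_ms)` tuple, hot paths and a likely bottleneck can be computed like this:

```python
from collections import Counter, defaultdict

Call = tuple[str, str, float]  # (caller, callee, latency_ms)


def hot_paths(calls: list[Call], top_n: int = 3) -> list[tuple[tuple[str, str], int]]:
    """Most frequent caller -> callee edges."""
    return Counter((caller, callee) for caller, callee, _ in calls).most_common(top_n)


def slowest_agent(calls: list[Call]) -> str:
    """Callee with the highest mean latency: a candidate bottleneck."""
    latencies: dict[str, list[float]] = defaultdict(list)
    for _, callee, latency in calls:
        latencies[callee].append(latency)
    return max(latencies, key=lambda agent: sum(latencies[agent]) / len(latencies[agent]))


calls = [
    ("classifier", "kb", 40.0),
    ("classifier", "remediation", 90.0),
    ("remediation", "policy-guard", 300.0),
    ("classifier", "kb", 60.0),
]
top_edge = hot_paths(calls, top_n=1)
bottleneck = slowest_agent(calls)
```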

Why do we care?

- Compliance: Observability is necessary to meet the broad security tenets of SOC 2 and PCI DSS that require us to trace any sensitive information that might bubble through a system.

- Security: In the case of an incident, we can trace what happened.

- Debugging: Only with a trace can developers pinpoint exactly why something might’ve succeeded or failed.

Challenge: Agents need to be able to communicate

Observability, memory, and permissions are problems that single agents and multi-agent workflows share. However, getting agents to actually work together is a uniquely multi-agent problem.

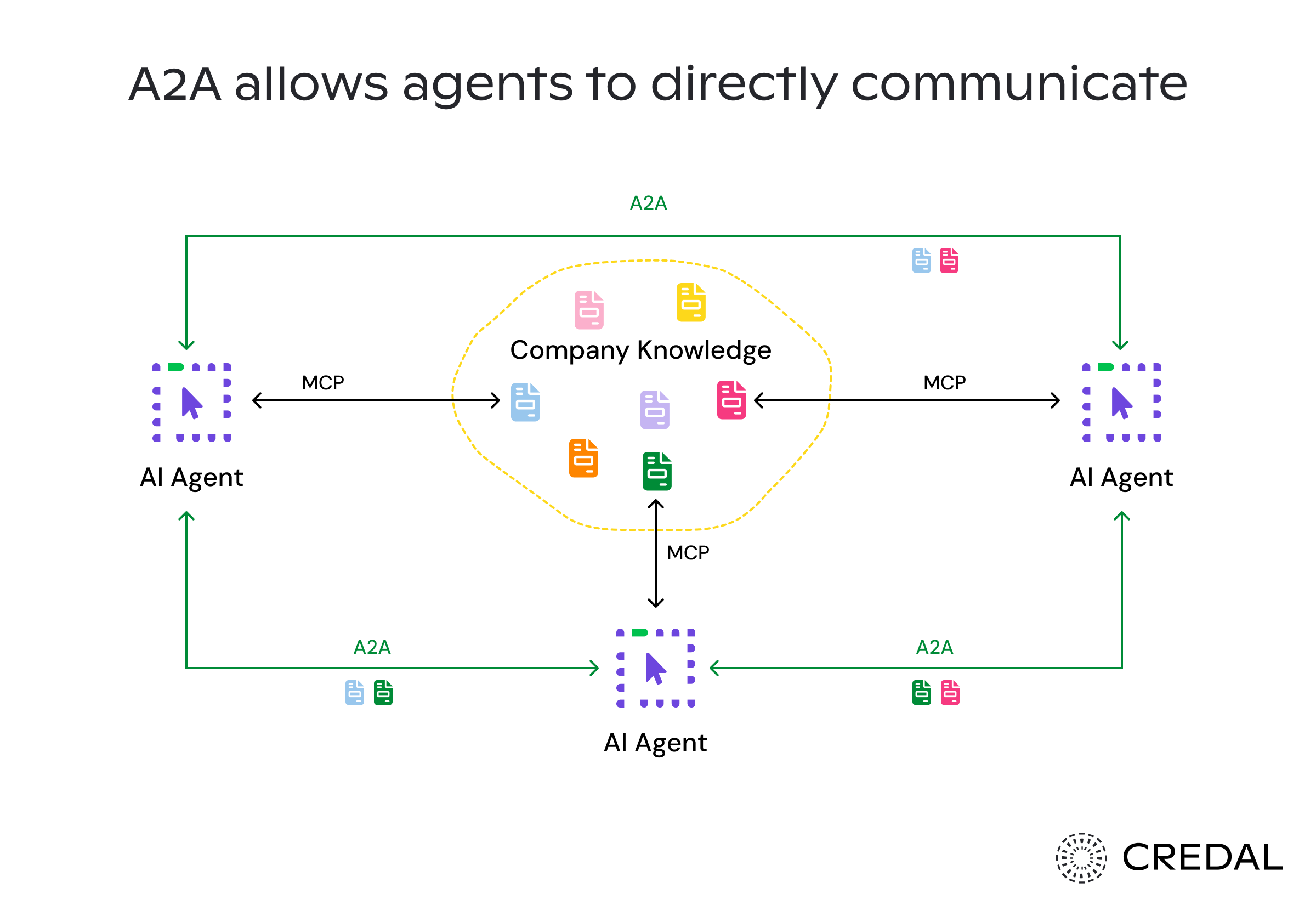

A2A

A2A (Agent-to-Agent) is a protocol developed by Google that enables effective communication between AI agents in multi-agent systems. The goal of A2A is to standardize how agents interact with each other through structured message formats that include task specifications, context pointers, and correlation IDs.

When agents work together, familiar network problems emerge: retries, timeouts, and the need for idempotency (a guarantee that a repeated request doesn’t trigger a duplicate action). A2A helps address these by providing a shared protocol between agents, so that communication breakdowns can be gracefully rectified.
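To illustrate why correlation IDs and idempotency matter, here is a small sketch. It is not the A2A wire format: `AgentMessage`, `IdempotentReceiver`, and `send_with_retries` are hypothetical names, and the “transport” is a plain function call. The scenario is a reply that gets lost after the work was already done, so the sender retries.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class AgentMessage:
    task: str
    context_ref: str  # a pointer to context, not the context itself
    correlation_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class IdempotentReceiver:
    """Executes each correlation ID at most once and replays the cached result."""

    def __init__(self) -> None:
        self._results: dict[str, str] = {}
        self.executions = 0

    def handle(self, message: AgentMessage) -> str:
        if message.correlation_id in self._results:
            return self._results[message.correlation_id]
        self.executions += 1
        result = f"done:{message.task}"
        self._results[message.correlation_id] = result
        return result


Deliver = Callable[[IdempotentReceiver, AgentMessage], str]


def send_with_retries(receiver: IdempotentReceiver, message: AgentMessage,
                      deliver: Deliver, max_attempts: int = 3) -> str:
    last_error = None
    for _ in range(max_attempts):
        try:
            return deliver(receiver, message)
        except TimeoutError as error:
            last_error = error
    raise RuntimeError("delivery failed") from last_error


attempts = {"count": 0}


def flaky_deliver(receiver: IdempotentReceiver, message: AgentMessage) -> str:
    result = receiver.handle(message)  # the work happens...
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise TimeoutError("response lost")  # ...but the first reply is lost
    return result


receiver = IdempotentReceiver()
reply = send_with_retries(receiver, AgentMessage("send_email", "ctx://candidate/42"), flaky_deliver)
```

The sender had to try twice, but because the retry reuses the same correlation ID, the email task runs only once.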

Ideally, a multi-agent orchestration platform needs to support A2A communication, or a competing framework with similar features, to ensure inter-agent communication happens without breakage.

Discovery

Another problem is actually connecting agents together. Manually connecting agents is slow and forces humans to do a lot of work. Ideally, multi-agent workflows don’t just turn into fancy Zapier chains. Fixed chains are ideal for certain deterministic needs, but agents should have the capacity to discover information and act. This is particularly important for problems that require deep research (e.g., determining whether a sales email meets compliance and sales doctrines), where information might be located in various disconnected locations.

A strong multi-agent orchestration platform should be able to expose agents to each other so that they can freely work together. In other words, an orchestration platform should have an agent registry, where each agent has a profile with clear documentation on its purpose.
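At its simplest, an agent registry is a lookup table of profiles that other agents can query. The sketch below assumes a hypothetical `AgentProfile` with a free-text purpose and a set of capability labels; real registries would likely carry richer metadata and access controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    name: str
    purpose: str                  # human- and agent-readable documentation
    capabilities: frozenset[str]  # hypothetical capability labels


class AgentRegistry:
    def __init__(self) -> None:
        self._profiles: dict[str, AgentProfile] = {}

    def register(self, profile: AgentProfile) -> None:
        self._profiles[profile.name] = profile

    def discover(self, capability: str) -> list[AgentProfile]:
        """Return every registered agent advertising the capability, sorted by name."""
        matches = (p for p in self._profiles.values() if capability in p.capabilities)
        return sorted(matches, key=lambda p: p.name)


registry = AgentRegistry()
registry.register(AgentProfile("email-outreach", "Sends on-brand emails.", frozenset({"send_email"})))
registry.register(AgentProfile("scheduler", "Books interviews on shared calendars.",
                               frozenset({"book_meeting", "send_email"})))
emailers = [p.name for p in registry.discover("send_email")]
```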

Orchestrator Agent

Some multi-agent workflows operate best with an orchestration agent that’s in charge of connecting agents together and delegating work. You can think of an orchestrator agent as the equivalent of a human manager. Its job is to streamline the discoverability problem and minimize redundancy by acting as a source of truth. This is sometimes referred to as a hub-and-spoke model.

Technically, an orchestrator agent operates the same as any other agent: it uses A2A or an equivalent to communicate with other agents, saves memory to maintain context, and runs on an LLM under the hood. Accordingly, an AI orchestration platform’s role is minimal here. However, a good AI orchestration platform would make it easy to locate and triage an orchestrator agent’s data, as that’s the central interest of any developer.
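Here is a deliberately simplified hub-and-spoke sketch. In practice the orchestrator would use an LLM to decide where work goes; the keyed lookup below is a stand-in for that decision, and the agent functions and task types are hypothetical. The delegation log is the kind of orchestrator data a developer would want to inspect.

```python
from typing import Callable

AgentFn = Callable[[str], str]


class Orchestrator:
    """Hub that routes each task to exactly one spoke agent and logs the delegation."""

    def __init__(self, spokes: dict[str, AgentFn]) -> None:
        self._spokes = spokes
        self.delegations: list[tuple[str, str]] = []

    def delegate(self, task_type: str, payload: str) -> str:
        if task_type not in self._spokes:
            raise KeyError(f"no agent registered for {task_type!r}")
        self.delegations.append((task_type, payload))
        return self._spokes[task_type](payload)


def evaluator(payload: str) -> str:
    return f"evaluated {payload}"


def emailer(payload: str) -> str:
    return f"emailed {payload}"


hub = Orchestrator({"evaluate": evaluator, "email": emailer})
outputs = [hub.delegate("evaluate", "application-17"), hub.delegate("email", "candidate-17")]
```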

Challenge: Cost and Performance Controls

As multi-agent systems scale, so do costs. Agents use LLMs under the hood, which typically have token costs, and complex multi-agent workflows might involve non-trivial API requests and data throughput.

To minimize the likelihood of a runaway bill or an outage, an AI orchestration platform should automatically flag whenever a cost or usage threshold is crossed so that a human can evaluate the risk.
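A threshold check of this kind can be very small. The sketch below assumes a flat, hypothetical price per 1,000 tokens and a single shared budget; real pricing varies by model and provider, and the alert would be routed to a human rather than appended to a list.

```python
from dataclasses import dataclass, field


@dataclass
class CostMonitor:
    budget_usd: float
    price_per_1k_tokens: float  # hypothetical flat rate
    spent_usd: float = 0.0
    alerts: list[str] = field(default_factory=list)

    def record(self, agent: str, tokens: int) -> None:
        self.spent_usd += tokens / 1000 * self.price_per_1k_tokens
        if self.spent_usd > self.budget_usd:
            # Flag for human review instead of silently continuing.
            self.alerts.append(
                f"{agent} pushed spend to ${self.spent_usd:.2f} (budget ${self.budget_usd:.2f})"
            )


monitor = CostMonitor(budget_usd=1.0, price_per_1k_tokens=0.01)
monitor.record("evaluator", tokens=50_000)  # under budget, no alert
monitor.record("email", tokens=60_000)      # crosses the budget, alert raised
```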

How Credal is approaching this

Credal is an AI orchestration platform that was purpose-built for scalable AI operations. As a result, multi-agent orchestration is a critical guidepost of Credal’s design. With Credal, you can launch managed agents with ready access to tools, including pre-built tools that integrate with third-party data sources like Snowflake, Dropbox, or Google Drive. Credal supports multiple agents; the platform makes it easy for agents to discover each other and work together.

Multi-agent workflows are naturally complex and require care. If you are interested in discovering how Credal works, please sign up for a demo today!